6 Dimensions of GCC AI Maturity: Assessment Framework

Discover how GCCs score 42 vs 37 for traditional enterprises on AI maturity. Explore our 6-dimension framework for assessing and accelerating AI readiness.

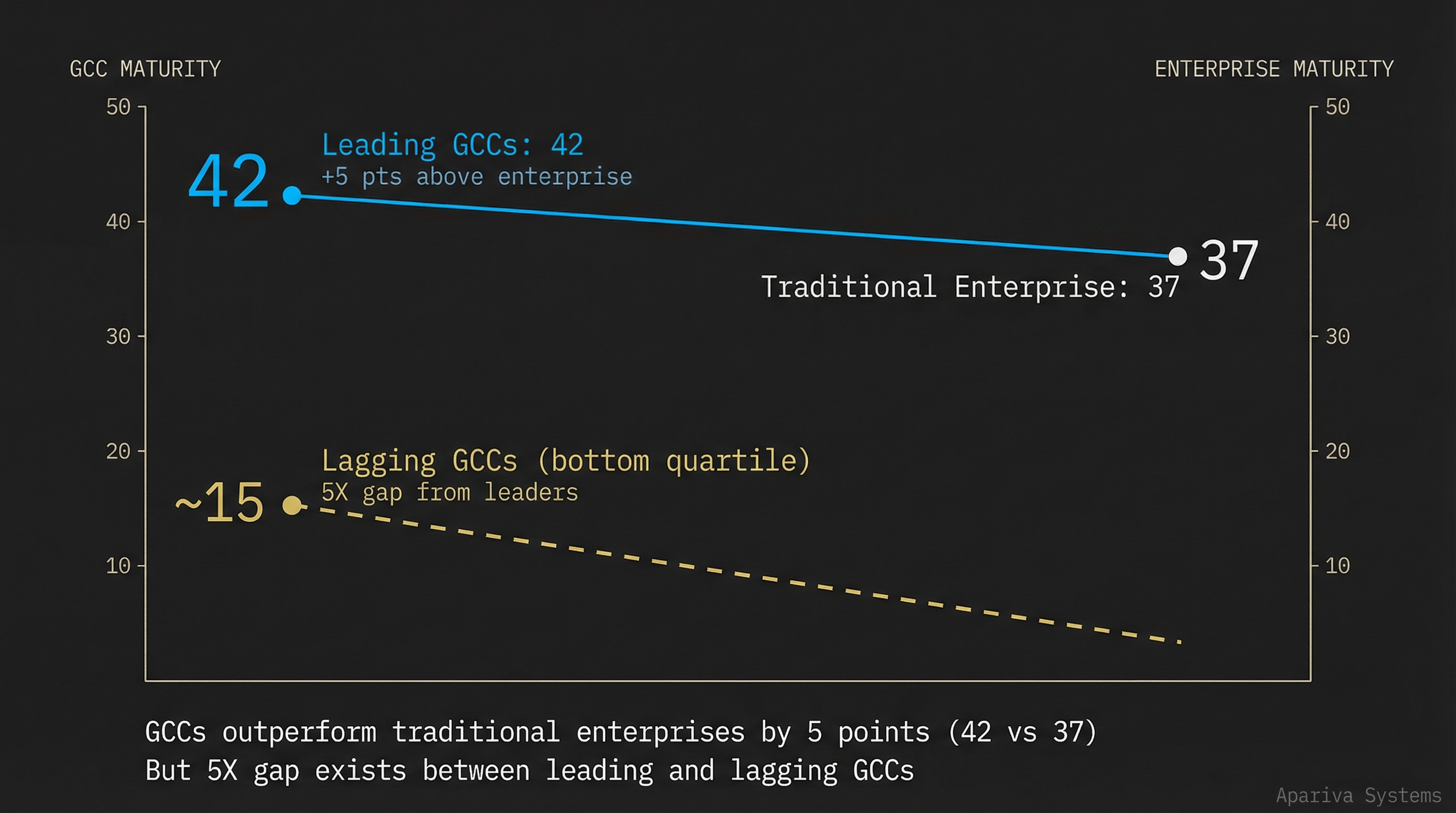

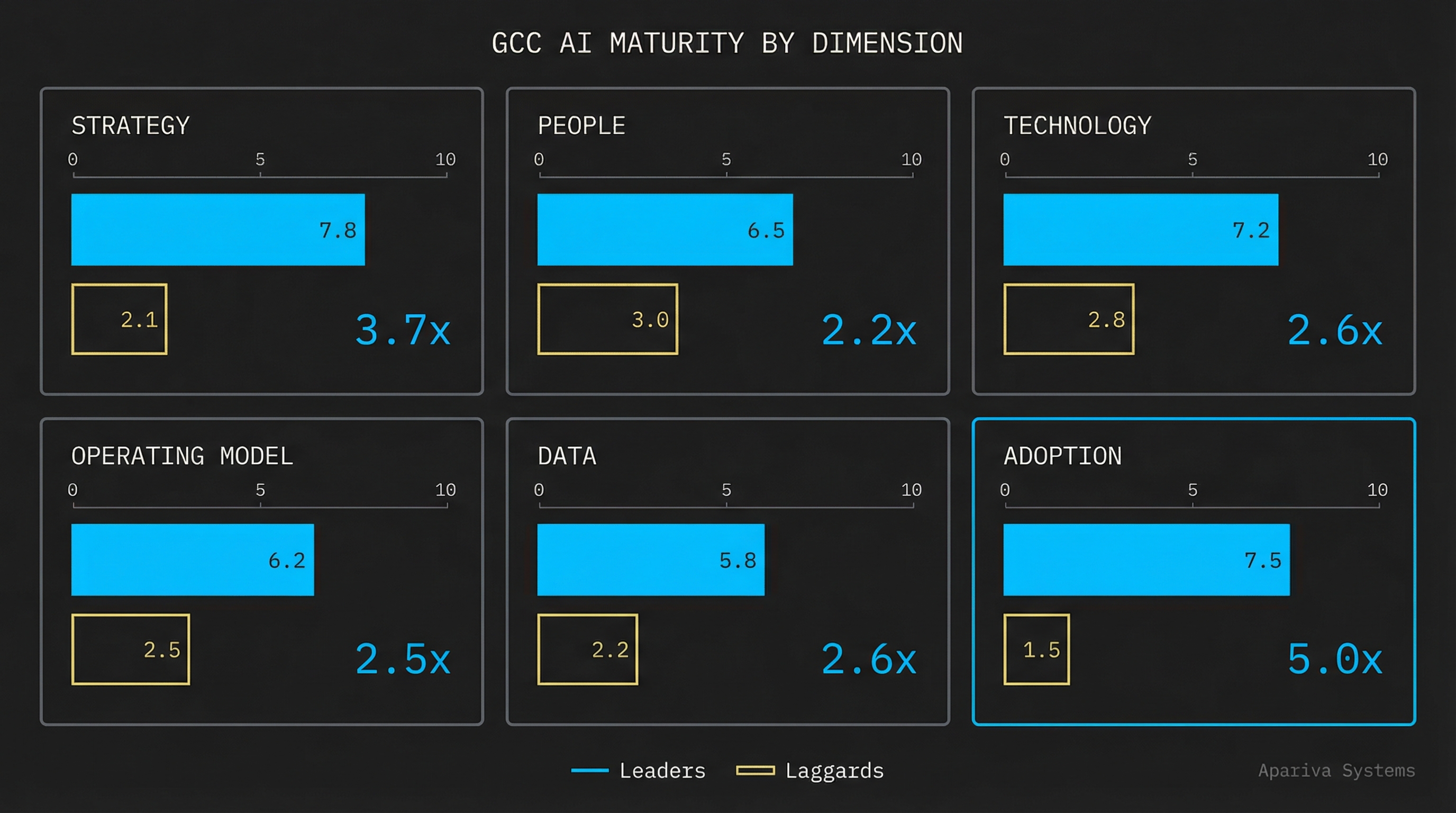

Your Global Capability Center in Bangalore employs 5,000 talented engineers, but when GenAI swept the enterprise world in 2023, the leadership team couldn't answer a simple question: Are we building an AI-native innovation hub, or are we retrofitting AI onto a cost center built for a different era? Recent research across 100+ CXOs revealed a startling 5X maturity gap between leading and lagging GCCs, even though GCCs as a group scored 42 on AI maturity against just 37 for traditional enterprises. The difference isn't technology access; it's evolutionary readiness across six interconnected dimensions that determine whether your GCC becomes a strategic asset or a liability in the agentic AI era.

The Inflection Point: Why GCCs Lead (or Lag) in AI Maturity

Global Capability Centers stand at a remarkable inflection point. After two decades of evolution from offshore cost centers to strategic innovation hubs, they now face their most significant transformation yet: becoming the primary engines of enterprise AI capability. The data reveals both immense opportunity and critical risk.

Comprehensive research of over 100 CXOs across Financial Services, Technology, Consumer Goods, Healthcare, and Energy uncovered a counterintuitive pattern. While conventional wisdom suggested that parent organizations in developed markets would lead AI adoption, GCCs demonstrated higher AI maturity scores—42 versus 37 for traditional enterprise centers. This advantage stems from structural factors: concentrated talent pools drawing from India's premier institutions (IITs, NITs), innovation-focused mandates unencumbered by decades of legacy systems, and leadership teams empowered to experiment without navigating bureaucratic approval chains.

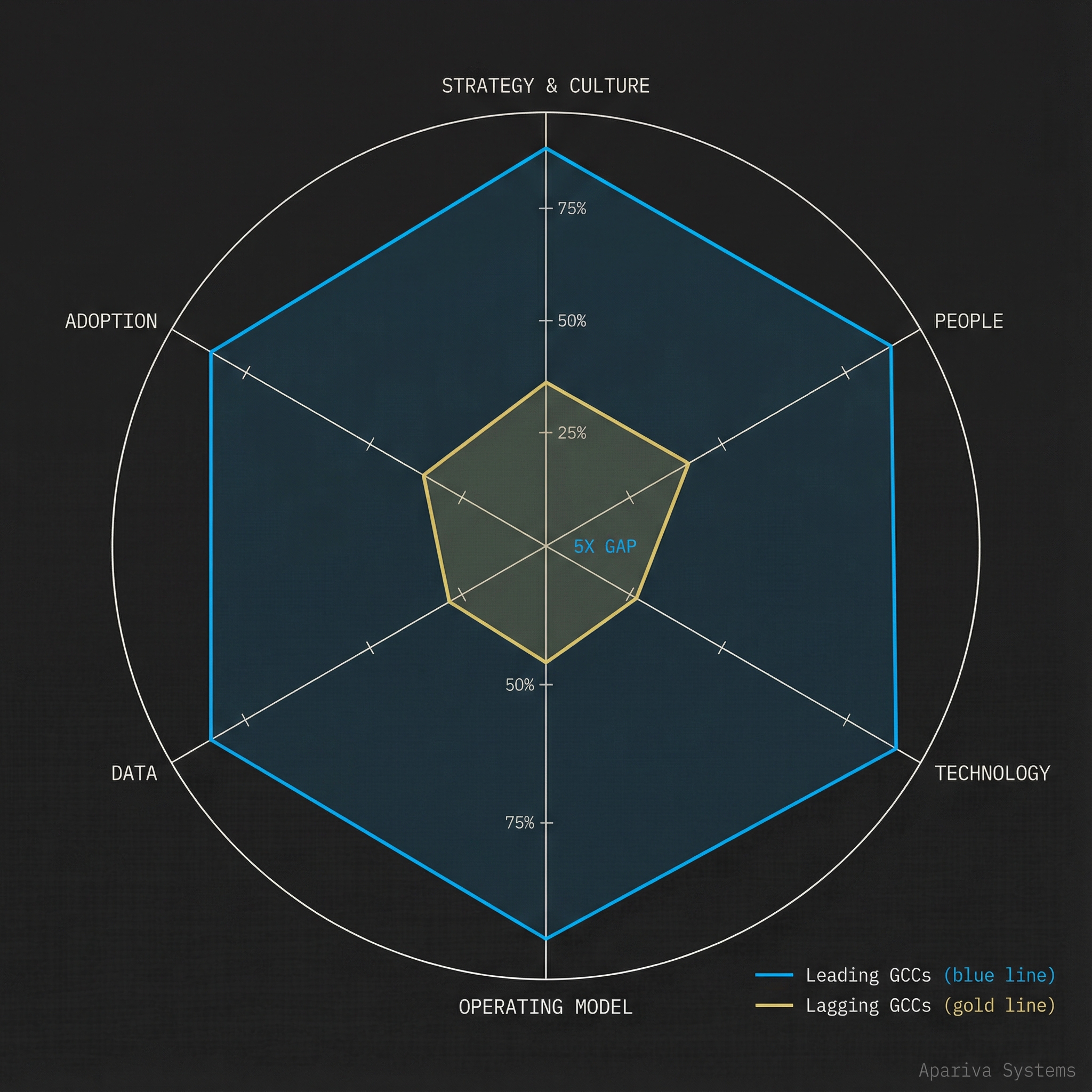

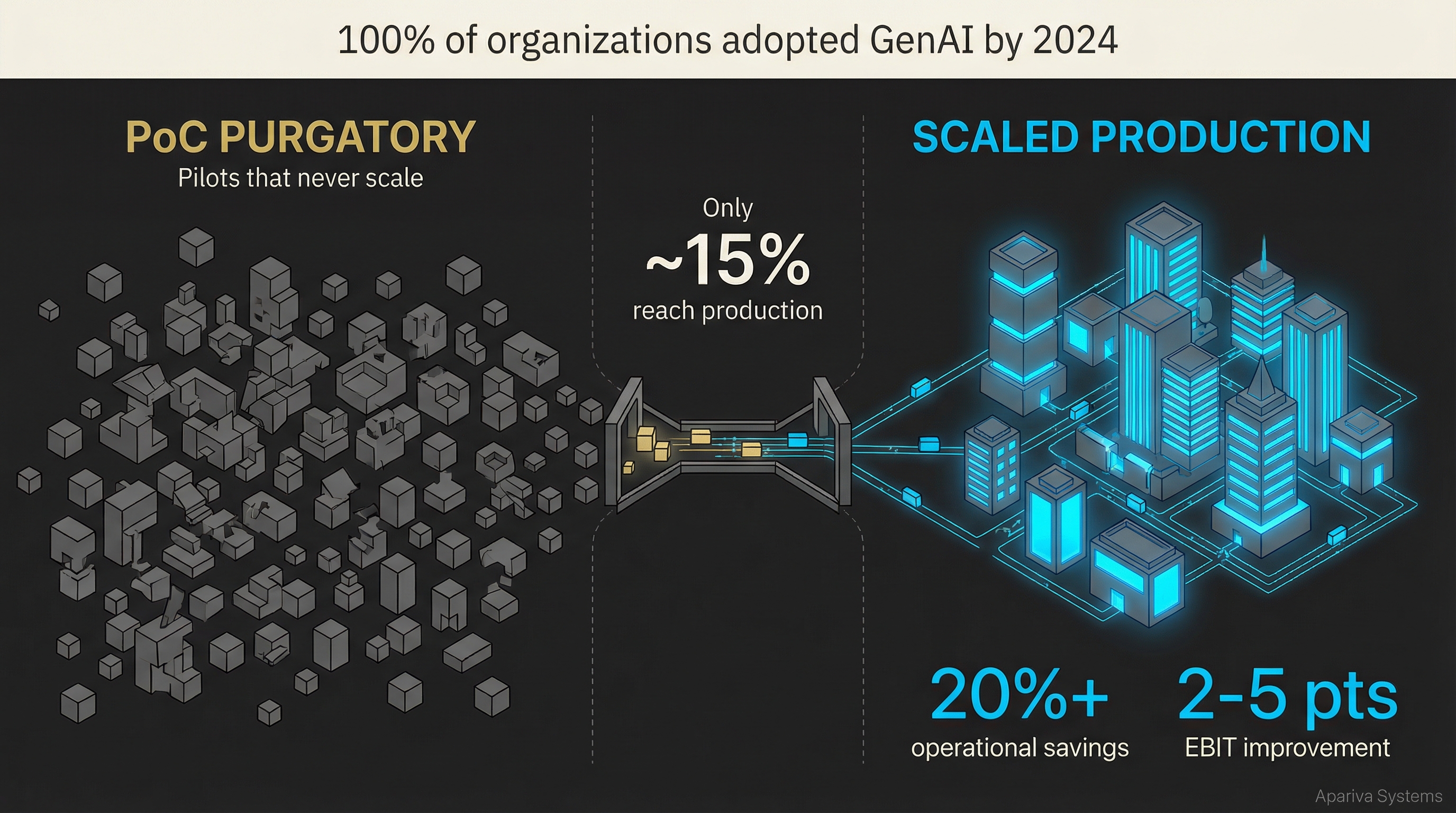

Yet this aggregate advantage masks a troubling reality: the gap between leading and lagging GCCs has widened to 5X across specific dimensions. In AI strategy and adoption, the spread between top-quartile and bottom-quartile performers exceeds 300%. Some GCCs are building autonomous AI systems that generate 20%+ operational savings and 2-5 percentage points of EBIT uplift. Others remain trapped in proof-of-concept purgatory, unable to move GenAI experiments from pilot to production.

What separates these populations isn't access to technology or capital. Every surveyed organization adopted GenAI in at least one function by 2024. The differentiator is something more fundamental: organizational consciousness about what AI transformation actually requires. Leading GCCs understand that AI maturity isn't achieved by deploying tools—it emerges from the interplay of strategy, talent, infrastructure, operating models, data governance, and change metabolism working as an integrated system.

This realization transforms the question from "Which AI platform should we buy?" to "Are we architected—culturally, operationally, technically—to support distributed intelligence at scale?" The answer demands honest assessment across six interconnected dimensions.

The 6-Dimension Framework: A Holistic View of AI Readiness

Apariva's GCC AI maturity framework consolidates industry research and our operational experience into six essential dimensions. Unlike generic maturity models that treat AI as another IT project, this framework recognizes that GCC AI readiness is fundamentally about organizational evolution—the capacity to transition from mechanistic cost arbitrage to living innovation systems.

Our six dimensions are:

1. Strategy & Culture – The intentionality directing evolution and the medium enabling ideas to propagate. This encompasses alignment with enterprise strategic priorities, clear AI vision and charter, cultural readiness for rapid experimentation, and executive sponsorship that transcends budget approval.

2. People – Moving beyond talent density to talent orchestration. This includes leadership competence in AI/ML domains, access to skilled resources from premier institutions, comprehensive upskilling programs, retention strategies, and the shift from individual genius to networked cognition.

3. Technology – Infrastructure as neural substrate for distributed intelligence. Cloud-native platforms, AI/ML tooling (vector databases, model registries, LLM orchestration), integration patterns for legacy systems, and continuous monitoring that enables learning and adaptation.

4. Operating Model – The transition from mechanical processes to adaptive systems. Governance structures (centralized, federated, hybrid), frameworks and SOPs that enable speed without chaos, measurement discipline, and continuous improvement mechanisms.

5. Data – The lifeblood of machine intelligence. Data quality, accessibility, governance, unified data fabrics versus fragmented silos, and the lineage and observability required to feed sophisticated ML models and LLM-based agents.

6. Adoption & Change Management – Scaling AI outcomes without organizational fragmentation. Moving from PoC to production, driving change without disruption, user adoption strategies, and the metabolic capacity to transform rather than merely augment.

These dimensions aren't independent variables—they form an interconnected system where strength in one area amplifies others, and weakness in any dimension constrains the whole. Think of them not as a checklist but as the essential nutrients for organizational evolution. A forest ecosystem requires sunlight, water, nutrients, microorganisms, genetic diversity, and time. Remove any element and the system degrades. Similarly, GCC AI maturity emerges from the dynamic interplay of all six dimensions working in concert.

This holistic view aligns with systems thinking: complex capabilities arise from network effects, not component excellence. A GCC with world-class AI talent but fragmented data infrastructure will underperform a GCC with good talent and unified data access. A GCC with sophisticated technology but risk-averse culture will lose to competitors with adequate technology and experimental mindsets.
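One way to make "weakness in any dimension constrains the whole" concrete is to aggregate dimension scores with a geometric mean instead of a simple average. The sketch below is illustrative only. The 0-100 scale, the example scores, and the `maturity_score` function are assumptions for this example, not the scoring method behind the research figures cited in this article.

```python
from math import prod

DIMENSIONS = (
    "strategy_culture",
    "people",
    "technology",
    "operating_model",
    "data",
    "adoption_change",
)


def maturity_score(scores: dict[str, float]) -> float:
    """Aggregate six dimension scores (each 0-100) with a geometric mean.

    A geometric mean is pulled down sharply by a single weak dimension,
    which mirrors the idea that the weakest dimension constrains the whole.
    """
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing dimensions: {missing}")
    values = [scores[d] for d in DIMENSIONS]
    if any(v < 0 or v > 100 for v in values):
        raise ValueError("scores must be between 0 and 100")
    return prod(values) ** (1 / len(values))


# Hypothetical GCC A: world-class talent, fragmented data.
gcc_a = {"strategy_culture": 70, "people": 95, "technology": 70,
         "operating_model": 70, "data": 20, "adoption_change": 70}
# Hypothetical GCC B: good talent, unified data access.
gcc_b = {"strategy_culture": 70, "people": 75, "technology": 70,
         "operating_model": 70, "data": 75, "adoption_change": 70}

score_a = maturity_score(gcc_a)
score_b = maturity_score(gcc_b)
print(f"GCC A: {score_a:.1f}, GCC B: {score_b:.1f}")
```

With these made-up inputs, GCC A lands below GCC B, and its geometric-mean score sits below its own simple average because the weak data dimension drags the aggregate down.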

The philosophical implication runs deeper than operational efficiency. As we enter the agentic AI era—where autonomous systems coordinate workflows, make decisions, and continuously improve—GCCs are evolving from human-managed cost centers to hybrid organizations where human and digital intelligences co-evolve. Assessing maturity across these six dimensions reveals whether your GCC is architected for this symbiosis or fighting against it.

Dimension 1 – Strategy & Culture: The Compass and the Soil

Strategy provides the intentionality that directs organizational evolution. Culture creates the medium in which new ideas either flourish or wither. Together, they form the foundational layer upon which all other dimensions rest—and research confirms that strategy exhibited the largest maturity gap between leaders and laggards across all measured dimensions.

Consider the contrast between two financial services GCCs, both with approximately 10,000 employees and access to identical AI technologies. The first operates under a clear directive: become the primary innovation engine for digital banking products globally. Goldman Sachs' India GCC exemplifies this model. Established in 2004 in Bangalore and expanded to Hyderabad in 2021, the center wasn't merely tasked with maintaining systems—it was mandated to build Marcus, Goldman's digital bank, from the ground up using AI-first architecture. The strategic clarity cascaded through every decision: talent acquisition focused on AI/ML expertise, infrastructure investments prioritized cloud-native platforms, operating models emphasized rapid iteration over rigid SLAs.

The cultural implications proved equally transformative. When leadership signals that the GCC is building the future rather than supporting the present, it attracts different talent and enables different behaviors. Engineers who might see traditional GCC work as stepping stones seek out these environments as destinations. Experimentation becomes expected rather than exceptional. Failure in pursuit of innovation earns recognition rather than reprimand. This culture produced tangible results: Gunjan Samtani, who led the GCC's engineering efforts, was elevated to COO of Engineering globally. Of Goldman Sachs' 2022 Managing Director cohort, 37 came from India—a testament to the center's strategic importance.

The second GCC, despite comparable resources, operates under fundamentally different strategic assumptions. Its mandate focuses on cost optimization and operational efficiency. These are valid objectives, but they create gravitational pull away from AI innovation. Every GenAI experiment requires business case justification before pilot approval. The innovation budget competes with cost reduction targets. Cultural messaging emphasizes risk mitigation over experimentation. Talented engineers join already planning their exit to product companies. The GCC remains a cost center with AI tools rather than an AI-native innovation hub.

The strategic dimension encompasses several critical elements:

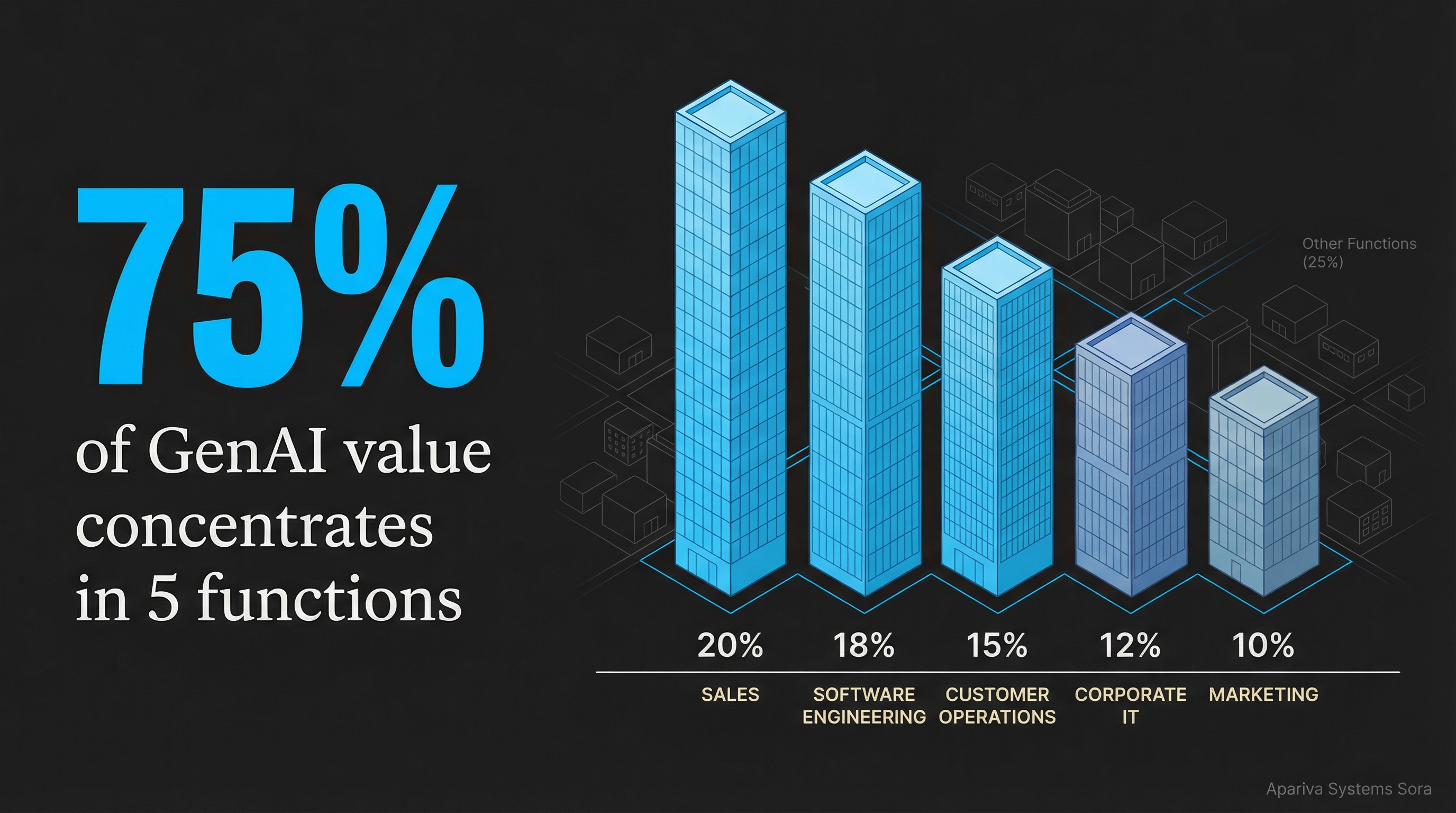

Alignment with Enterprise Priorities: Leading GCCs translate corporate AI strategy into actionable GCC roadmaps. They understand which enterprise functions offer the highest value for AI augmentation—research shows 75% of GenAI value concentrates in five functions: Sales, Software Engineering, Customer Operations, Corporate IT, and Marketing—and they prioritize accordingly.

Clear AI Vision and Charter: Vague directives like "explore AI opportunities" produce vague results. Specific charters—"Reduce customer service resolution time by 40% using AI-powered triage and response systems by Q4 2025"—create clarity that enables execution.

Cultural Readiness for Experimentation: Leading GCCs institutionalize experimentation through innovation time, internal hackathons, fast-fail frameworks, and celebration of learning from unsuccessful pilots. They recognize that AI innovation requires different risk tolerance than traditional IT operations.

Executive Sponsorship: C-suite engagement transcends budget approval. JPMorgan's decision to hold its Technology Innovation Forum in India for the first time in 14 years signaled strategic importance that cascaded through the organization. When the AI/ML technology leader for Asset & Wealth Management sits in the India GCC and reports directly to global leadership, the strategy becomes tangible.

The philosophical lens here reveals a deeper truth: strategy isn't a document or a planning process—it's the organization's consciousness about its purpose and future. Culture isn't ping-pong tables and casual Fridays—it's the collective belief system about what behaviors lead to success. In the context of AI transformation, these intangibles determine whether your GCC becomes a site of emergence—where new capabilities spontaneously arise from the interaction of talent, technology, and freedom to experiment—or a site of execution, mechanically implementing decisions made elsewhere.

The assessment question isn't "Do we have an AI strategy?" but rather "Does our strategy create conditions for AI-native innovation to emerge, and does our culture reward the behaviors that enable it?"

Dimension 2 – People: Beyond Talent Density to Talent Orchestration

The industrial-era model of talent management optimized for density: concentrate skilled workers, standardize their output, measure productivity by throughput. The AI era demands a fundamentally different approach: talent orchestration, where individual skills matter less than the patterns of collaboration, learning, and emergent intelligence arising from networked cognition.

JPMorgan's India GCC trajectory illustrates this evolution. The expansion from 46,000 FTEs in 2023 to a target of 57,000 by the end of 2024 represents more than headcount growth. India now accounts for one-third of JPMorgan's global technology workforce and houses its largest operations and technology center globally, at 2.3 million square feet. But the strategic significance lies in the roles these teams perform: leading the firm's public cloud migration, developing proprietary blockchain infrastructure (Onyx), and maintaining Product Owner responsibilities for critical systems. All of these roles require coordination across distributed teams, rapid learning of emerging technologies, and autonomous decision-making authority.

This scale and scope are only possible through orchestration, not mere density. The GCC operates as a network of specialized centers of excellence: AI/ML capabilities in Bangalore, blockchain expertise in Mumbai, data engineering in Hyderabad. These nodes don't function independently; they form an interconnected system where insights from one domain inform innovations in another. A machine learning engineer working on fraud detection shares vector embedding techniques with a colleague building semantic search for legal document analysis. The compound learning effects exceed what any individual or isolated team could achieve.

The people dimension encompasses several critical capabilities:

Leadership with AI/ML Fluency: Traditional IT leadership focused on delivery predictability, SLA management, and cost control. AI-native leadership requires comfort with uncertainty, an understanding of probabilistic systems, and the ability to balance exploration with exploitation. When NatWest planned to hire 3,000+ digital and analytics specialists by 2026 to augment its 14,000-person GCC (representing 50% of its global digital workforce), the leadership imperative shifted from managing delivery teams to orchestrating innovation networks.

Access to Premier Talent Pools: India's concentration of IIT and NIT graduates provides structural advantage, but leading GCCs go further—they build direct pipelines through campus partnerships, internship programs, and sponsorship of research initiatives. The metric isn't just "How many IIT graduates did we hire?" but "What percentage of our new hires come through referrals from existing high performers?"—an indicator that top talent attracts more top talent.

Comprehensive Upskilling Programs: The half-life of technical skills in AI/ML is measured in months, not years. Leading GCCs invest 10-20% of budgets in continuous learning: partnerships with universities for advanced degrees, internal certification programs in specialized domains (prompt engineering, vector database optimization, LLM fine-tuning), and rotational assignments that build T-shaped expertise.

Retention through Purpose and Pathway: Annual attrition below 15% distinguishes leaders from laggards, where churn of 25% or more is common. But retention metrics obscure the deeper question: Are we retaining the people who matter most? Leading GCCs create clear pathways to enterprise leadership. When 37 of Goldman Sachs' 2022 Managing Directors came from India, it signaled that GCC tenure isn't a career detour; it's a competitive advantage for reaching top leadership.

Centers of Excellence as Knowledge Networks: Rather than embedding AI expertise diffusely across teams, leading GCCs establish dedicated CoEs with 100+ specialized practitioners. These CoEs don't operate as ivory tower research groups—they function as internal consultancies, pairing with business units to accelerate adoption while continuously learning from production deployments.

The philosophical shift underlying this dimension is profound. The age of the lone genius—the exceptional individual whose brilliance drives innovation—gives way to networked cognition, where breakthrough insights emerge from the interactions among many competent practitioners. This mirrors developments in AI itself: the transition from narrow AI systems (optimized for specific tasks) to large language models (trained on diverse data, capable of emergent behaviors not explicitly programmed).

GCCs architected for talent orchestration create conditions for similar emergence. They don't just hire smart people—they design environments where those people's collective intelligence compounds. The assessment question becomes: "Do our people systems create conditions for emergent, networked intelligence, or do they optimize for individual productivity in isolation?"

Dimension 3 – Technology: Infrastructure as Neural Substrate

In biological systems, the substrate matters immensely. Neural tissue's specific properties—electrical conductivity, synaptic plasticity, dense interconnection—enable cognition. Change the substrate and you change the cognitive potential. The same principle applies to technological infrastructure in GCCs: the platforms, tools, and architectural patterns don't merely enable AI work—they determine what kinds of intelligence can emerge.

Consider the legacy system challenge that constrains most GCCs. A financial services GCC inherits decades-old core banking systems, mainframe applications, and fragmented data warehouses. Each system has its own data model, access patterns, and integration protocols. Building an AI-powered customer service agent that needs real-time access to account balances, transaction history, product catalogs, and regulatory compliance rules becomes an integration nightmare. The AI model itself might be state-of-the-art, but the infrastructure substrate limits what it can learn and how quickly it can respond.

Leading GCCs approach infrastructure differently. Walmart's technology centers in Bangalore, Gurugram, and Chennai don't merely maintain existing systems—they build cloud-native architectures optimized for AI workloads from inception. This includes:

Cloud-Native Platforms: Moving beyond "lift and shift" migration to platform-native design. Compute scales elastically based on inference load. Storage optimizes for vector embeddings and time-series data. Networking minimizes latency for real-time AI decision systems. The infrastructure adapts to AI workload characteristics rather than forcing AI workloads to adapt to legacy constraints.

AI/ML Tooling Ecosystem: Leading GCCs standardize on integrated toolchains: vector databases for semantic search (Pinecone, Weaviate, Qdrant), model registries for version control and lineage (MLflow, Weights & Biases), LLM orchestration frameworks (LangChain, LlamaIndex, Mastra), and observability platforms that track not just system performance but model behavior and data drift.

Integration Patterns for Hybrid Environments: Recognizing that core systems can't be replaced overnight, sophisticated GCCs implement API layers that abstract legacy complexity, event-driven architectures that enable real-time data synchronization, and data virtualization that provides unified views across fragmented sources. The goal isn't to eliminate legacy systems immediately—it's to prevent them from constraining AI innovation.

Continuous Monitoring and Observability: Traditional IT monitoring tracks uptime, latency, and error rates. AI systems require deeper observability: model prediction accuracy over time, data drift detection, embedding quality metrics, prompt effectiveness tracking, and cost-per-inference monitoring. Leading GCCs instrument their systems to enable continuous learning and optimization.
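Data drift detection, mentioned in the observability item above, can be illustrated with the Population Stability Index (PSI), one common way to compare a feature's distribution at training time with its distribution in production. This is a minimal sketch under stated assumptions: the equal-width binning, the small floor for empty bins, the synthetic samples, and the 0.25 alert threshold are illustrative choices, not a prescribed standard for any particular GCC.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of a numeric feature using PSI.

    `expected` is the reference sample (e.g. training data) and `actual`
    is a recent production sample. Bin edges are equal-width over the
    reference range; out-of-range values fall into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def bin_fractions(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(1000)]  # stand-in for training data
shifted = [x + 3 for x in reference]        # stand-in for drifted production data

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
DRIFT_THRESHOLD = 0.25  # assumed alert level; tune per feature
print(psi_same, psi_shifted > DRIFT_THRESHOLD)
```

In practice a check like this would run on a schedule per feature and feed the same dashboards that track latency and error rates, so model behavior is monitored alongside system health.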

The statistics validate this focus. Research shows that 75% of GenAI value creation concentrates in five functions: Sales, Software Engineering, Customer Operations, Corporate IT, and Marketing. Not coincidentally, these are the functions most amenable to cloud-native, API-first, data-intensive architectures. GCCs with legacy infrastructure constraints struggle to capture this value—they lack the substrate for these AI capabilities to flourish.

The philosophical metaphor here is mycelial networks in forest ecosystems. Fungi create vast underground networks that connect trees, enabling nutrient exchange, chemical signaling, and symbiotic relationships. Individual trees that appear isolated above ground are deeply interconnected below ground. The mycelium doesn't just transport resources—it enables collective intelligence that helps forests respond to threats, share resources from healthy trees to struggling ones, and coordinate reproductive timing.

Similarly, the right technology infrastructure in GCCs enables information and intelligence to flow across organizational boundaries. A data scientist building a forecasting model can access cleaned, governed data from multiple sources without requesting permissions or writing integration code. A product team can deploy a GenAI feature without waiting for infrastructure provisioning. An AI agent monitoring customer interactions can trigger automated workflows across CRM, ERP, and support ticketing systems. The substrate enables these connections; without it, each capability remains isolated.

The assessment question: "Does our infrastructure enable AI capabilities to emerge and interconnect organically, or does it create friction that limits what we can build and how quickly we can learn?"

Dimension 4 – Operating Model: From Processes to Living Systems

The industrial era gave us the assembly line: mechanistic processes optimized for repeatability, efficiency, and quality control. Workers performed standardized tasks in sequence. Variation was waste. The operating model maximized throughput while minimizing deviation. This thinking dominated IT operations for decades, manifesting in ITIL frameworks, SLA-driven contracts, and waterfall development processes.

AI-era GCCs require fundamentally different operating models—ones that optimize for adaptation, learning, and resilience rather than mechanical efficiency. The shift mirrors the difference between a factory assembly line and an ant colony. Assembly lines stop when a component fails or the process needs reconfiguration. Ant colonies adapt: when the food source moves, foraging patterns reorganize; when a predator appears, defensive behaviors emerge; when the queen dies, workers can develop reproductive capacity. The colony exhibits behaviors not programmed into individual ants—they emerge from the operating model encoded in pheromone trails, role divisions, and feedback loops.

Walmart's GCC evolution demonstrates this transition. Initially focused on cost-effective IT operations with rigid SLAs and change control processes, the centers shifted to agile development, DevOps practices, and continuous delivery as the mandate expanded to product development and supply chain innovation. The operating model needed to support rapid experimentation: multiple hypothesis tests per week, A/B testing at scale, canary deployments that minimize risk while enabling learning. Growing from 1,000 FTEs in 2016 to a target of 12,000 by 2024 required operating models that could scale without ossifying.

The operating model dimension encompasses several critical elements:

Governance Structure: Leading GCCs adopt hybrid governance that is centralized for architecture standards, security, and data governance, and federated for use case prioritization, implementation approaches, and innovation experimentation. This balance enables consistency without stifling autonomy. A purely centralized model bottlenecks at approval processes. A purely federated model creates fragmentation and duplicated effort. The hybrid approach creates "freedom within a framework."

Frameworks and SOPs that Enable Speed: Paradoxically, the right structure accelerates rather than constrains. Mature GCCs develop AI project templates, pre-approved technology stacks, automated compliance checks, and standardized deployment pipelines. These frameworks eliminate repetitive decisions, codify best practices, and reduce cognitive load on teams. Speed comes from not having to reinvent approaches for every project.

Measurement Discipline: "What gets measured gets managed" applies, but the metrics matter enormously. Lagging GCCs measure activity: number of AI PoCs launched, ML models developed, training programs conducted. Leading GCCs measure outcomes: productivity improvements from AI augmentation, cycle time reduction, defect rates, adoption rates, and business impact metrics like revenue uplift or cost savings. The goal isn't to "do AI"—it's to create measurable value.

Continuous Improvement Mechanisms: The operating model itself must evolve. Leading GCCs institute regular retrospectives, A/B test operating model changes, maintain runbooks that capture lessons from incidents, and create feedback loops from production systems to development processes. When State Street positioned its India CEO to report directly to the Global COO and grew India to 50%+ of total headcount, the organizational structure enabled rapid feedback from execution to strategy.
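The hybrid governance and automated compliance ideas in the list above can be sketched as a small policy check: a central team owns a few non-negotiable guardrails, and everything else is left to the delivering team. The approved-stack list, the data classifications, and the `ProjectProposal` fields below are hypothetical placeholders, not a description of any specific GCC's policy.

```python
from dataclasses import dataclass, field

# Centrally owned guardrails (illustrative values only).
APPROVED_STACKS = {"python-fastapi", "java-spring", "typescript-node"}
CLASSIFICATIONS_REQUIRING_REVIEW = {"pii", "financial"}


@dataclass
class ProjectProposal:
    name: str
    tech_stack: str
    data_classification: str  # e.g. "public", "internal", "pii"
    has_security_review: bool = False
    # Federated choices: teams set these without central approval.
    use_case_priority: int = 3
    notes: list[str] = field(default_factory=list)


def check_guardrails(p: ProjectProposal) -> list[str]:
    """Return guardrail violations; an empty list means auto-approved."""
    violations = []
    if p.tech_stack not in APPROVED_STACKS:
        violations.append(f"stack '{p.tech_stack}' is not pre-approved")
    if (p.data_classification in CLASSIFICATIONS_REQUIRING_REVIEW
            and not p.has_security_review):
        violations.append(
            f"'{p.data_classification}' data requires a security review")
    return violations


ok = ProjectProposal("ticket-triage", "python-fastapi", "internal")
blocked = ProjectProposal("kyc-summarizer", "rust-actix", "pii")

print(check_guardrails(ok))       # no violations: team proceeds on its own
print(check_guardrails(blocked))  # two violations to resolve before launch
```

The design choice worth noting is that the check returns reasons and never asks for a human approval step: teams that stay inside the guardrails move immediately, which is the "freedom within a framework" idea expressed in code.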

The 3-5X maturity spread identified across dimensions stems largely from operating model sophistication. Leaders have institutionalized mechanisms for sensing market changes, experimenting with responses, learning from results, and scaling successful approaches. Laggards operate reactively: initiatives launch when executives mandate them, stop when enthusiasm wanes, and lack systematic learning capture.

The philosophical insight: mechanical systems optimize for stability, returning to equilibrium when disturbed. Living systems optimize for adaptation, evolving in response to environmental pressures. Industrial-era operating models were mechanical by design—they maintained stability in predictable environments. AI-era operating models must be adaptive—they enable evolution in response to rapidly changing technology landscapes, competitive dynamics, and organizational learning.

The assessment question: "Does our operating model enable adaptive responses to change, or does it enforce mechanical repetition of processes designed for a different era?"

Dimension 5 – Data: The Lifeblood of Machine Intelligence

In biological organisms, blood circulation serves multiple functions: it delivers oxygen and nutrients, removes metabolic waste, distributes hormones and immune cells, and regulates temperature. Without circulation, even oxygen-rich environments and nutrient-rich diets cannot sustain life. The resources exist but cannot reach where they're needed.

Data plays an analogous role in AI-native organizations. Without quality data flowing to where decisions occur—whether those decisions are made by humans, algorithms, or increasingly by autonomous AI agents—intelligence cannot emerge. Yet most GCCs inherit data landscapes architected for a different era: fragmented across business units, siloed in application-specific databases, inconsistently governed, and optimized for transactional processing rather than analytical insight.

The challenge manifests in concrete ways. A financial services GCC builds a sophisticated fraud detection model using transaction data from the core banking system. The model achieves 95% accuracy in testing. But deploying it to production reveals that customer profile data sits in a separate CRM system with different update cadences, merchant category codes are maintained by yet another team with conflicting taxonomies, and geolocation data requires API calls to third-party services with variable latency. The model's sophistication becomes irrelevant—the data substrate cannot support the required real-time inference patterns.

Leading GCCs address this through comprehensive data strategies:

Unified Data Fabrics: Rather than attempting to consolidate all data into centralized warehouses (an approach that fails due to scale, sovereignty requirements, and organizational politics), sophisticated GCCs implement logical data fabrics. These provide unified query interfaces across distributed data sources, abstract storage heterogeneity, enforce consistent governance policies, and optimize for access patterns required by AI workloads. Users and systems query the fabric; it handles the complexity of routing, transformation, and federation.

Data Quality and Lineage: Machine learning systems amplify data quality issues. A 1% error rate in training data can produce models that fail 30% of the time in edge cases. Leading GCCs invest in data quality frameworks: automated validation pipelines, lineage tracking from source systems through transformations to consumption points, data contracts that specify schemas and quality guarantees, and observability that detects drift before it degrades model performance.

Governance Without Bureaucracy: Data governance often implies approval committees, access request forms, and weeks-long provisioning cycles. Modern approaches flip this model: data is accessible by default, with automated enforcement of privacy, security, and compliance rules. Rather than requesting access to customer data and waiting for approval, a data scientist accesses a production-safe synthetic dataset with closely matching statistical properties. Rather than debating who owns a data element, governance frameworks specify clear resolution processes and accountability.

Optimized Storage for AI Workloads: Transactional databases optimize for write consistency and read latency on row lookups. AI workloads require different patterns: bulk reads for training datasets, vector similarity search for embeddings, time-series optimization for sequential data, and graph structures for relationship-heavy domains. Leading GCCs deploy specialized storage: vector databases (Pinecone, Weaviate, Qdrant) for semantic search, time-series databases (InfluxDB, TimescaleDB) for sensor and event data, and graph databases (Neo4j, TigerGraph) for network analysis.
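The data contracts and automated validation pipelines described in the list above can be illustrated with a small batch check. This is a sketch under assumptions: the `DataContract` shape, the field names, and the 1% null tolerance are invented for the example and do not represent a specific data contract standard or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataContract:
    required_fields: dict[str, type]  # column name -> expected Python type
    max_null_fraction: float          # quality guarantee applied per field


def validate_batch(rows: list[dict], contract: DataContract) -> list[str]:
    """Check a batch of records against a contract; return problems found."""
    if not rows:
        return ["batch is empty"]
    problems = []
    for name, expected_type in contract.required_fields.items():
        values = [row.get(name) for row in rows]
        nulls = sum(v is None for v in values)
        if nulls / len(rows) > contract.max_null_fraction:
            problems.append(f"{name}: {nulls}/{len(rows)} values are null")
        wrong = sum(
            v is not None and not isinstance(v, expected_type) for v in values
        )
        if wrong:
            problems.append(
                f"{name}: {wrong} values are not {expected_type.__name__}")
    return problems


# Hypothetical contract for a transaction feed used by a fraud model.
contract = DataContract(
    required_fields={"txn_id": str, "amount": float, "merchant_code": str},
    max_null_fraction=0.01,
)

good = [
    {"txn_id": "t1", "amount": 10.0, "merchant_code": "5411"},
    {"txn_id": "t2", "amount": 25.5, "merchant_code": "5812"},
]
bad = [
    {"txn_id": "t3", "amount": "10.0", "merchant_code": None},
    {"txn_id": "t4", "amount": 7.0, "merchant_code": "5411"},
]

print(validate_batch(good, contract))
print(validate_batch(bad, contract))
```

Running a check like this at the boundary between the producing and consuming teams surfaces schema and quality problems before they reach training or inference, which is where the time savings discussed next tend to come from.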

The statistics underscore the importance: GCCs that implemented unified data strategies reported 2-3X faster time to production for new AI use cases compared to those with fragmented data landscapes. The reduction comes not from faster model development—that's largely commoditized through transfer learning and pre-trained models—but from eliminating weeks or months spent on data access, cleaning, and integration.

But beyond operational efficiency, data quality and accessibility create compound learning effects. In traditional software systems, each application's value is relatively independent. In AI systems, the value compounds: data from customer service interactions improves product recommendations; product usage data enhances fraud detection; fraud patterns inform compliance automation. Every data source potentially enriches multiple AI applications, but only if the data is accessible, trusted, and interconnected.

The philosophical perspective: data is to AI systems what nutrients are to biological systems—essential but not sufficient alone. Even nutrient-rich soil cannot sustain growth without water to dissolve nutrients and circulation to distribute them. Similarly, high-quality data cannot enable intelligence without the infrastructure to move it, the governance to trust it, and the accessibility to apply it. The assessment evaluates not just data existence but data metabolism: the organizational capacity to sense, circulate, filter, and apply data where it creates value.

The assessment question: "Does our data infrastructure enable compound learning effects, where each new data source amplifies the value of existing AI capabilities, or do data silos constrain each AI system to isolated, independent value creation?"

Dimension 6 – Adoption & Change Management: Scaling Without Fragmentation

A common pattern emerges across GCCs attempting AI transformation: impressive pilots that never reach production. A GenAI-powered contract analysis system achieves 90% accuracy in testing and promises 60% reduction in legal review time. Leadership celebrates. Then the system encounters production realities: lawyers don't trust AI recommendations without seeing source citations; the system integrates poorly with existing contract management workflows; edge cases not represented in training data create embarrassing failures; and the legal team already operates at capacity, with no time to provide feedback for model improvement.

The pilot demonstrated technical feasibility. But adoption requires something more fundamental: organizational metabolism that can integrate new capabilities without rejection. The biological metaphor is apt. When organisms consume nutrients, digestion breaks them down, the circulatory system distributes them, and cellular metabolism incorporates them into new tissue. The organism transforms through this process—it doesn't simply add nutrients on top of existing structure. Failed adoption attempts are like indigestion: the new capability sits unintegrated, creating discomfort rather than nourishment.

Research quantifies this challenge: 100% of surveyed organizations adopted GenAI in at least one function by 2024, but the gap between pilot and production remains the primary barrier to value capture. Yet the potential rewards are substantial: 20%+ operational savings and 2-5 percentage points of EBIT improvement for organizations that achieve scaled adoption. The difference between leaders and laggards isn't technology sophistication—it's change metabolism.

Leading GCCs approach adoption and change management through several mechanisms:

Moving from PoC to Production as Core Competency: Rather than treating each AI deployment as a custom project, mature GCCs develop productionization playbooks: standardized architecture patterns that address common integration challenges, pre-defined security and compliance review processes, established training and change management curricula, and dedicated transition teams that bridge from innovation to operations. The goal is to make production deployment as repeatable as pilot launches.

User-Centered Design and Co-Creation: The contract analysis system failed not because of technical limitations but because it was designed in isolation from user workflows. Leading GCCs embed business users throughout development: co-creating requirements, participating in iterative testing, providing domain expertise that shapes model behavior, and becoming champions for adoption within their organizations. When users feel ownership rather than imposition, adoption accelerates.

Gradual Rollout with Feedback Loops: Rather than big-bang deployments, sophisticated GCCs implement AI capabilities incrementally: initial deployment to a small, high-engagement user group; rapid iteration based on actual usage patterns and feedback; expansion to broader audiences only after demonstrating value; and continuous monitoring that detects adoption friction before it becomes failure. This approach minimizes risk while maximizing learning.
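The incremental rollout described above can be sketched as a simple gate that widens the audience only when adoption signals look healthy. This is a minimal illustration, not a prescribed mechanism: the stage fractions, the two metrics, and the thresholds are all hypothetical placeholders that each GCC would set for itself.

```python
from dataclasses import dataclass

# Hypothetical rollout stages: fraction of the user base exposed at each step.
STAGES = [0.05, 0.25, 0.50, 1.00]


@dataclass
class StageMetrics:
    weekly_active_rate: float  # share of exposed users who used the tool this week
    satisfaction: float        # mean survey score on a 1-5 scale


def next_stage(current: int, metrics: StageMetrics,
               min_active: float = 0.6, min_satisfaction: float = 4.0) -> int:
    """Advance one stage only when both adoption signals clear their thresholds.

    Otherwise hold at the current stage so the team can iterate on feedback.
    The default thresholds are illustrative assumptions, not benchmarks.
    """
    healthy = (metrics.weekly_active_rate >= min_active
               and metrics.satisfaction >= min_satisfaction)
    if healthy and current < len(STAGES) - 1:
        return current + 1
    return current


# Example: a small, engaged pilot group clears the gate; a lukewarm one does not.
stage = next_stage(0, StageMetrics(weekly_active_rate=0.7, satisfaction=4.2))
print(f"Expose {STAGES[stage]:.0%} of users next")
```

Holding rather than rolling back when a signal dips is a deliberate simplification here; a real gate would likely also define conditions for shrinking the audience.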

Organizational Change Without Disruption: AI adoption often triggers anxiety about job displacement, skill obsolescence, and role changes. Leaders address this proactively: defining clear principles about AI augmentation versus replacement, investing in reskilling programs that prepare people for AI-augmented roles, celebrating human-AI collaboration successes, and ensuring transparent communication about strategic intent. When Barclays positioned its India GCC for blockchain and distributed ledger innovation, success required not just technical capability but helping engineers see these emerging technologies as career opportunities rather than threats.

Measurement and Incentive Alignment: Adoption failures often stem from misaligned incentives. If operational teams are measured on error reduction and AI systems introduce new error modes, resistance is rational. If individual contributors are evaluated on personal productivity and AI tools require time investment to learn, adoption stalls. Leading GCCs align incentives: team-level metrics that reward AI-augmented outcomes, innovation time that legitimizes experimentation, and career pathways that recognize AI fluency as advancement criteria.

The statistics validate these approaches. GCCs with dedicated change management practices achieved 3-5X higher production adoption rates than those treating adoption as an afterthought. NatWest's plan to grow its 14,000-person GCC (already 50% of its global digital workforce) by adding 3,000+ digital and analytics roles by 2026 depends on absorbing this talent without fragmenting into isolated innovation islands. The operating model must metabolize continuous capability expansion.

The philosophical insight extends the earlier biological metaphor: transformation is metabolic, not additive. Organizations don't become AI-native by adding AI tools to existing structures—they transform by integrating AI capabilities so deeply that the distinction between "AI work" and "work" dissolves. Just as you don't think about which organs are processing the food you ate, users in AI-native organizations don't think about which tasks use AI versus human intelligence—they simply accomplish objectives using whatever mix of human and machine capabilities proves most effective.

This metabolic view represents a profound shift from traditional frameworks that treat change as a project with beginning, middle, and end states. In AI-native organizations, change is continuous: models retrain on new data, capabilities expand through emergent behaviors, and user expectations evolve with experience. The organizational metabolism must support perpetual transformation.

The assessment question: "Does our organization metabolize new AI capabilities—integrating them seamlessly into workflows and continuously adapting—or do AI initiatives exist as unintegrated add-ons that create overhead rather than value?"

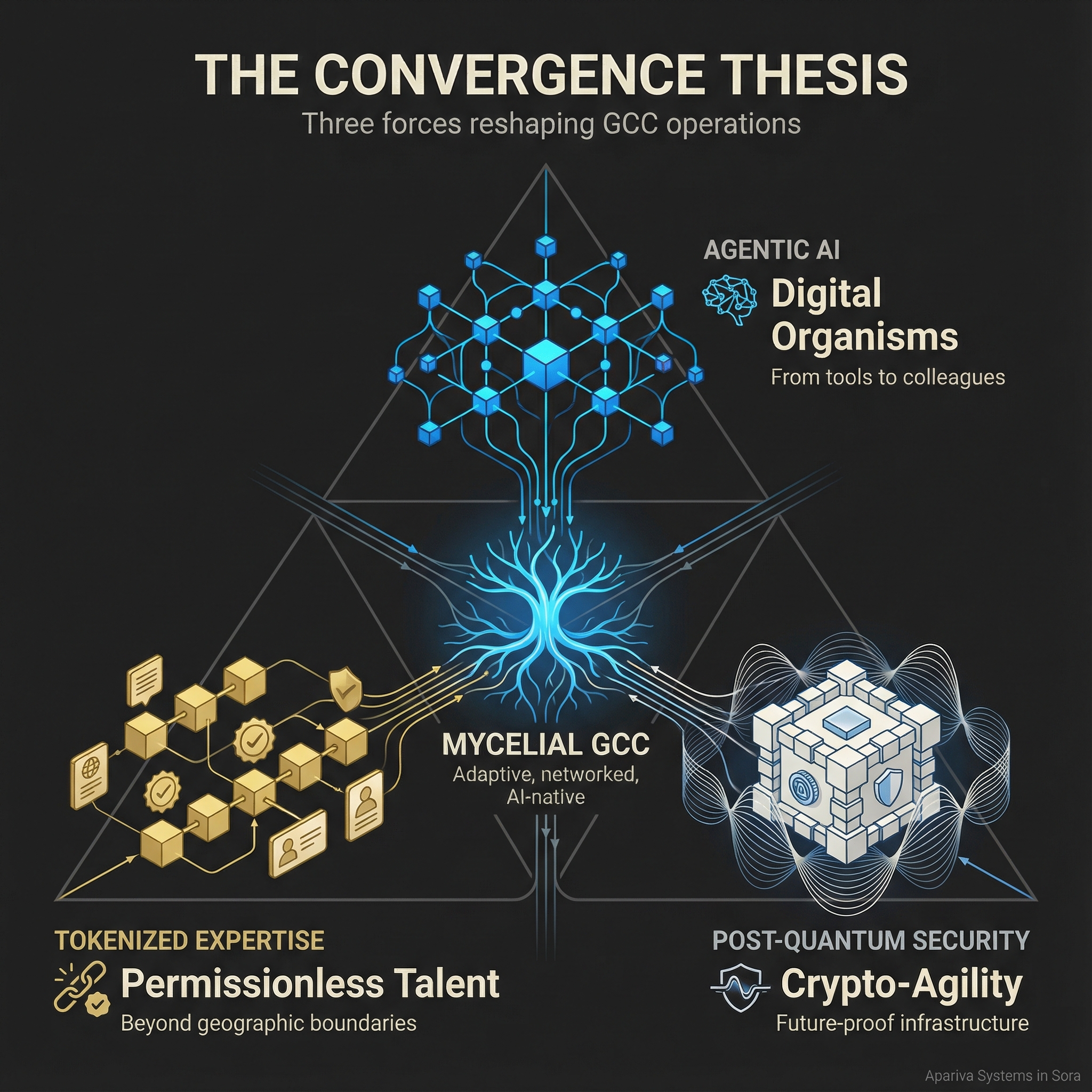

The Convergence Thesis: AI, Tokenization, and Post-Quantum GCC Operations

The six-dimension framework addresses current-state AI maturity, but a deeper transformation approaches. Three technological convergences will fundamentally reshape what GCCs are and how they operate: agentic AI systems that function as autonomous digital organisms, tokenized expertise and blockchain-verified credentials enabling permissionless global talent networks, and post-quantum computing creating both vulnerabilities and opportunities in distributed operations.

Agentic AI: From Tools to Digital Organisms

The GenAI systems most GCCs deploy today respond to prompts but lack agency. They analyze when asked, generate when instructed, and classify when directed. The next wave of AI systems exhibits genuine agency: autonomously identifying opportunities, coordinating multi-step workflows, learning from outcomes, and adapting strategies based on results.

Consider the implications for GCC operations. Rather than engineers maintaining customer service chatbots, AI agents monitor service quality, identify emerging issue patterns, generate and test response improvements, coordinate with product development agents when systemic fixes are needed, and continuously optimize based on satisfaction signals. The GCC's role shifts from building and maintaining AI tools to cultivating AI agents—creating environments where digital organisms can evolve productive behaviors.

This isn't science fiction. Anthropic's Claude and OpenAI's GPT models already demonstrate emergent capabilities not explicitly programmed: mathematical reasoning, code generation in languages not heavily represented in training data, and behavior on some tests that resembles theory-of-mind reasoning. As these capabilities mature and agents gain persistent memory, access to tools and APIs, and the ability to coordinate with other agents, GCC operations will increasingly involve human-AI teams where the distinction between human employees and digital colleagues blurs.

The maturity assessment shifts accordingly: it's not enough to ask "Can our people use AI tools effectively?" The question becomes "Can our organization cultivate beneficial AI agent behaviors and maintain alignment between agent objectives and human values?"

Tokenized Expertise: Permissionless Global Talent

Today's GCC model depends on geography-specific advantages: lower labor costs in India, talent concentration in specific cities, and organizational boundaries that define who can contribute. Blockchain-based credential systems and tokenized expertise networks will erode these assumptions.

Imagine verified skill credentials on tamper-proof blockchains: a developer's contributions to open source projects create verifiable reputation tokens; specialized AI expertise earns certification NFTs from recognized institutions; successful project deliveries generate on-chain references that future clients can verify without trusting self-reported resumes. This infrastructure enables truly permissionless talent markets where contribution opportunity depends on demonstrated capability rather than geographic location, institutional affiliation, or employment status.

For GCCs, this creates both opportunity and threat. The opportunity: access global expertise without geographic constraints, compose project teams dynamically based on verified capabilities, and enable fluid transitions between full-time, contract, and gig contributors. The threat: geographic labor cost advantages diminish when talent is globally accessible, incumbent advantages from local presence erode, and retaining top talent becomes harder when opportunities are globally visible and frictionless.

Leading GCCs will adapt by shifting value propositions from cost arbitrage to capability cultivation—becoming known as places where expertise develops, challenging problems get solved, and reputation tokens accumulate. The maturity assessment incorporates readiness for this shift: "Are we building portable expertise and verifiable reputation for our teams, or does our value proposition depend entirely on geography-specific arbitrage that blockchain-enabled talent markets will eliminate?"

Post-Quantum Preparedness: Security Beyond Classical Cryptography

Quantum computing advances create a looming vulnerability for GCC operations. Most contemporary public-key cryptography, the foundation for secure key exchange, authentication, and blockchain signatures, depends on mathematical problems (integer factoring and discrete logarithms) that a sufficiently large quantum computer running Shor's algorithm could solve dramatically faster than any known classical method. Symmetric ciphers and hash functions are weakened far less and can generally be hardened with larger keys. When sufficiently powerful quantum computers emerge (estimates range from 5 to 15 years), much existing cryptographic infrastructure becomes vulnerable.

GCCs managing sensitive financial data, operating blockchain-based systems, or maintaining distributed trust mechanisms face acute post-quantum risks. But the quantum era also brings new paradigms: quantum key distribution for provably secure communication, quantum-resistant cryptographic algorithms (the first of which NIST standardized in 2024), and quantum computing applications in optimization, drug discovery, and financial modeling.

The convergence becomes apparent: agentic AI systems coordinating across GCC networks will require post-quantum secure communication; tokenized expertise networks will need quantum-resistant blockchain infrastructure; and GCCs that develop quantum computing capabilities (even if initially through cloud access to quantum processors) gain advantages in optimization problems central to supply chain, portfolio management, and logistics operations.

The maturity assessment must incorporate forward-looking dimensions: "Are we architecting systems with crypto-agility so encryption algorithms can be swapped without application-layer changes? Are we monitoring quantum computing developments relevant to our domains? Are we building internal expertise in post-quantum cryptography?"
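Crypto-agility, as raised in the first of these questions, can be illustrated with a small sketch: application code asks for a named algorithm from a registry and stores that name alongside the output, so a new algorithm can be introduced by changing configuration rather than call sites. The registry, function names, and envelope format below are hypothetical, and HMAC-SHA256 and HMAC-SHA512 are used only as stand-ins to show the swap; they are not post-quantum signature schemes.

```python
import hashlib
import hmac
from typing import Callable, Dict

# A signer takes (key, message) and returns an authentication tag.
Signer = Callable[[bytes, bytes], bytes]

# Registry of interchangeable algorithms, keyed by a name kept in configuration.
# These two MACs are stand-ins for illustration, NOT post-quantum schemes.
ALGORITHMS: Dict[str, Signer] = {
    "hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
    "hmac-sha512": lambda key, msg: hmac.new(key, msg, hashlib.sha512).digest(),
}


def sign(message: bytes, key: bytes, algorithm: str) -> dict:
    """Tag a message and record which algorithm produced the tag."""
    tag = ALGORITHMS[algorithm](key, message)
    return {"alg": algorithm, "tag": tag.hex()}


def verify(message: bytes, key: bytes, envelope: dict) -> bool:
    """Verify with the algorithm named in the envelope, not a hard-coded one."""
    signer = ALGORITHMS.get(envelope["alg"])
    if signer is None:
        return False  # unknown or retired algorithm
    expected = signer(key, message).hex()
    return hmac.compare_digest(expected, envelope["tag"])


# Switching algorithms is a configuration change; callers stay the same.
key, message = b"demo-key", b"quarterly-report"
old = sign(message, key, "hmac-sha256")
new = sign(message, key, "hmac-sha512")
print(verify(message, key, old), verify(message, key, new))
```

Because each envelope names its own algorithm, data signed before a migration remains verifiable afterwards. A production design would also restrict by policy which algorithms are still accepted, so that retiring a weak algorithm means removing it from the registry.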

The Mycelial Future

These convergences suggest GCCs evolving from organizational units—bounded entities with clear inside/outside distinctions—toward mycelial networks: distributed, adaptive systems where value flows along gradients, resources move toward where they create most impact, and intelligence emerges from network effects rather than hierarchical planning.

In forest ecosystems, mycorrhizal networks connect trees across species, enabling older trees to support younger ones, sharing nutrients based on need rather than individual ownership. The network exhibits intelligence: responding to threats, allocating resources, and maintaining ecosystem health through distributed coordination without central control.

Future GCCs may operate similarly: autonomous AI agents coordinating across organizational boundaries, human expertise flowing toward highest-value problems through tokenized reputation systems, secure coordination enabled by post-quantum cryptographic infrastructure, and value accruing to networks that enable emergence rather than organizations that enforce boundaries.

This vision extends well beyond current maturity frameworks, but the direction matters for present strategy. GCCs optimizing solely for current-state efficiency may find themselves obsolete as these convergences reshape the landscape. Those building foundations for adaptive, networked, AI-native operations position themselves for continued relevance regardless of how rapidly the future arrives.

How to Use This Framework: From Assessment to Action

Understanding the six dimensions provides conceptual clarity, but translating that understanding into organizational improvement requires systematic assessment and prioritized action. Based on work with dozens of GCCs across financial services, technology, and healthcare sectors, we've developed a practical methodology for applying this framework.

Step 1: Conduct Comprehensive Self-Assessment

Begin with honest baseline measurement across all six dimensions. For each dimension, evaluate current state across four maturity levels:

- Emergent (1-2 score): Ad-hoc initiatives, no systematic approach, limited leadership awareness

- Developing (3-4 score): Pilot programs initiated, growing awareness, inconsistent execution

- Advancing (5-6 score): Scaled deployments, established practices, measurable outcomes

- Leading (7-8 score): Continuous optimization, industry-leading practices, strategic advantage

Gather evidence from multiple sources: leadership interviews, employee surveys, technology audits, operational metrics, and external benchmarking. Resist the temptation to inflate scores; honest assessment creates the foundation for meaningful improvement.
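To make the scoring bands concrete, here is a minimal sketch that maps each dimension's 1-8 score to its maturity level and adds up a total. The function names and sample scores are hypothetical, and the unweighted sum is an assumption made for illustration; the framework does not specify how dimension scores are aggregated.

```python
# Score bands taken from the four maturity levels listed above.
LEVELS = [
    (1, 2, "Emergent"),
    (3, 4, "Developing"),
    (5, 6, "Advancing"),
    (7, 8, "Leading"),
]

DIMENSIONS = [
    "Strategy & Culture",
    "People",
    "Technology",
    "Operating Model",
    "Data",
    "Adoption & Change",
]


def level_for(score: int) -> str:
    """Map a 1-8 dimension score to its maturity level name."""
    for low, high, name in LEVELS:
        if low <= score <= high:
            return name
    raise ValueError(f"score must be between 1 and 8, got {score}")


def summarize(scores: dict) -> dict:
    """Return per-dimension levels plus a simple unweighted total.

    The unweighted sum is an illustrative assumption, not part of the framework.
    """
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing dimensions: {missing}")
    levels = {d: level_for(scores[d]) for d in DIMENSIONS}
    total = sum(scores[d] for d in DIMENSIONS)
    return {"levels": levels, "total": total, "max_total": 8 * len(DIMENSIONS)}


# Hypothetical baseline for one GCC.
baseline = {
    "Strategy & Culture": 6,
    "People": 7,
    "Technology": 5,
    "Operating Model": 4,
    "Data": 3,
    "Adoption & Change": 2,
}
report = summarize(baseline)
for dimension, level in report["levels"].items():
    print(f"{dimension}: {baseline[dimension]} ({level})")
print(f"Total: {report['total']} / {report['max_total']}")
```

Keeping the per-dimension levels next to the total matters more than the total itself, since a single aggregate can hide exactly the uneven profile the assessment is meant to expose.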

Example assessment questions:

Strategy & Culture: Does the GCC have a documented AI vision aligned with enterprise priorities? Do innovation budgets represent 10%+ of total budget? Do cultural norms reward experimentation or punish failure?

People: What percentage of new hires come from premier institutions? Is annual attrition below 15%? Do clear pathways exist from GCC roles to enterprise leadership?

Technology: Are core systems cloud-native or legacy? Do standardized AI/ML toolchains exist? Can teams deploy models to production in days versus months?

Operating Model: Is governance centralized, federated, or hybrid? Do standardized frameworks exist for common AI workflows? Are teams measured on outcomes or activity?

Data: Can data scientists access production-safe datasets without multi-week approval processes? Does lineage tracking exist from source to consumption? Are there unified query interfaces across data silos?

Adoption & Change: What percentage of AI pilots reach production? Do user-centered design practices inform AI development? Are change management resources dedicated to each deployment?

Step 2: Identify Priority Gap Dimensions

Most GCCs cannot address all six dimensions simultaneously. Prioritize based on:

- Largest gaps: Dimensions scoring 3+ points below your other dimensions create bottlenecks limiting overall maturity

- Highest impact: Based on McKinsey data, Strategy, Technology, and Adoption typically offer highest ROI

- Organizational readiness: Some dimensions require prerequisite capabilities (e.g., advanced AI adoption requires sufficient data quality)

- Leadership alignment: Prioritize dimensions where executive sponsorship is strongest

Select 2-3 dimensions for focused improvement over the next 6-12 months. Attempting to address all six simultaneously dilutes resources and creates change fatigue.
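The "largest gaps" criterion can be sketched as a small calculation. The version below assumes one possible reading of "3+ points below others", namely that a dimension trails the average of the remaining five by at least three points; the function name, threshold default, and sample scores are hypothetical. It covers only the first criterion, so impact, readiness, and leadership alignment still need human judgment.

```python
from statistics import mean


def priority_gaps(scores: dict, threshold: float = 3.0, limit: int = 3) -> list:
    """Return up to `limit` (dimension, gap) pairs, largest gap first.

    A dimension qualifies when its score trails the mean of the other
    dimensions by at least `threshold` points (an assumed interpretation).
    """
    gaps = []
    for name, score in scores.items():
        others = [s for n, s in scores.items() if n != name]
        gap = mean(others) - score
        if gap >= threshold:
            gaps.append((name, round(gap, 2)))
    gaps.sort(key=lambda item: item[1], reverse=True)
    return gaps[:limit]


# Hypothetical profile: strong strategy and talent, weak data and adoption.
profile = {
    "Strategy & Culture": 7,
    "People": 7,
    "Technology": 6,
    "Operating Model": 6,
    "Data": 2,
    "Adoption & Change": 1,
}
for dimension, gap in priority_gaps(profile):
    print(f"{dimension}: {gap} points below the average of the other dimensions")
```

The `limit` default of three mirrors the guidance to focus on two or three dimensions at a time.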

Step 3: Define 90-Day Improvement Sprints

Structure improvement as focused sprints with tangible deliverables:

Sprint Example – Technology Dimension:

- Weeks 1-2: Inventory existing AI/ML infrastructure, identify integration gaps, benchmark against cloud-native reference architectures

- Weeks 3-6: Design unified ML platform architecture, select vendor partners, secure budget approval

- Weeks 7-10: Implement core platform components (model registry, vector database, orchestration layer)

- Weeks 11-12: Onboard pilot team, migrate one existing use case to new platform, measure improvement in deployment velocity

Sprint Example – Adoption Dimension:

- Weeks 1-2: Identify top 3 AI pilots stuck in "PoC purgatory," conduct user research to understand adoption barriers

- Weeks 3-6: Redesign user experience based on feedback, implement gradual rollout strategy, establish feedback mechanisms

- Weeks 7-10: Execute rollout to expanded user base, provide training and change support, monitor adoption metrics

- Weeks 11-12: Analyze results, document lessons learned, update productionization playbook for future deployments

The 90-day timeframe creates urgency without overwhelming teams, allows course correction before significant resource investment, and generates momentum through visible progress.

Step 4: Establish Measurement Cadence

Define specific KPIs for each prioritized dimension and track monthly:

- Strategy: AI investment as % of total budget, alignment score from leadership survey

- People: AI/ML skill penetration rate, attrition among AI specialists, referral hire percentage

- Technology: Deployment velocity (days from model development to production), infrastructure reliability, cost per inference

- Operating Model: Approval cycle time, PoC to production conversion rate, governance overhead

- Data: Data access time, quality incident frequency, data scientist productivity

- Adoption: Production deployment rate, user satisfaction scores, business impact metrics

Review progress quarterly with executive sponsors, celebrate wins, diagnose stalls, and adjust priorities based on learnings.
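Two of the KPIs above, PoC to production conversion rate and deployment velocity, are straightforward to compute from a simple pilot log. The sketch below is one way to do it; the `Pilot` record, its field names, and the sample dates are hypothetical, and using the median rather than the mean for velocity is a choice made here to limit the influence of one unusually slow deployment.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import List, Optional


@dataclass
class Pilot:
    name: str
    model_ready: date               # date model development finished
    in_production: Optional[date]   # None while the pilot has not shipped


def conversion_rate(pilots: List[Pilot]) -> float:
    """Share of pilots that reached production, from 0.0 to 1.0."""
    if not pilots:
        return 0.0
    shipped = sum(1 for p in pilots if p.in_production is not None)
    return shipped / len(pilots)


def deployment_velocity_days(pilots: List[Pilot]) -> Optional[float]:
    """Median days from model development to production, shipped pilots only."""
    durations = [
        (p.in_production - p.model_ready).days
        for p in pilots
        if p.in_production is not None
    ]
    return median(durations) if durations else None


# Hypothetical pilot log for one reporting period.
pilots = [
    Pilot("contract-analysis", date(2024, 1, 10), date(2024, 2, 9)),
    Pilot("invoice-matching", date(2024, 3, 1), date(2024, 3, 15)),
    Pilot("support-triage", date(2024, 4, 1), None),
]
print(f"PoC to production: {conversion_rate(pilots):.0%}")
print(f"Median days to production: {deployment_velocity_days(pilots)}")
```

Tracking both numbers together guards against gaming either one: a high conversion rate achieved through very slow deployments, or fast deployments of only the easiest pilots, would show up in the other metric.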

Step 5: Scale and Integrate

As initial sprints demonstrate results, expand improvements across the organization:

- Codify successful practices into standard operating procedures

- Extend training programs to broader populations

- Allocate sustained funding for capability development

- Integrate maturity assessment into annual strategic planning

The goal isn't to "finish" maturity improvement. In rapidly evolving domains like AI, maturity is continuous adaptation rather than achievement of an end state. Leading GCCs institutionalize maturity assessment as an ongoing organizational practice.

Connection to Apariva's Services

Apariva specializes in accelerating GCC AI maturity through:

- Comprehensive Maturity Assessments: We conduct detailed evaluations across all six dimensions, benchmark against industry leaders, and provide actionable roadmaps with prioritized initiatives

- Implementation Partnership: Our teams integrate with your GCC to execute improvement sprints, transfer knowledge, and build internal capabilities

- Ongoing Advisory: We provide continuous guidance as AI landscapes evolve, helping you adapt strategies and maintain competitive advantage

If your GCC is ready to move from assessment to action, we invite you to schedule a complimentary maturity assessment consultation to explore how these frameworks apply to your specific context.

Key Takeaways: The Path from Cost Center to Innovation Organism

As GCCs navigate the transition from cost arbitrage centers to AI-native innovation hubs, six interconnected dimensions determine success:

Strategy & Culture provides the compass and soil: Clear AI vision aligned with enterprise priorities, cultural permission to experiment and learn from failure, and executive sponsorship that transcends budget approval create conditions for innovation to emerge rather than requiring it to be mandated.

People orchestration exceeds talent density: Access to premier technical talent matters, but networked cognition—the ability to create compound learning effects across distributed teams—separates leaders from laggards. The age of individual genius gives way to collective intelligence.

Technology infrastructure functions as neural substrate: Cloud-native platforms, AI-optimized toolchains, and integration patterns that abstract legacy constraints determine what kinds of intelligence can emerge. Like mycelial networks in forests, the right infrastructure enables organic connection and nutrient flow.

Operating models must evolve from mechanical to adaptive: Industrial-era processes optimized for repeatability fail in AI contexts that require continuous learning and adaptation. The shift from assembly-line thinking to ant-colony thinking enables resilience and emergent coordination.

Data quality and accessibility create compound learning: Each new data source potentially amplifies multiple AI applications, but only if data flows freely, maintains quality, and operates under governance frameworks that enable access without compromising security or compliance.

Adoption is metabolic, not additive: Organizations become AI-native not by adding AI tools atop existing processes but by integrating capabilities so deeply that the distinction between AI work and work dissolves. Change management becomes change metabolism.

The 5X maturity gap identified between leading and lagging GCCs stems not from technology access—all surveyed organizations adopted GenAI in at least one function by 2024—but from organizational readiness across these six dimensions working as an integrated system. Leaders don't excel in one or two dimensions; they demonstrate strength across all six and understand the network effects that emerge from their interplay.

Looking forward, GCCs face convergences that will reshape operations fundamentally: agentic AI systems functioning as autonomous digital organisms, tokenized expertise enabling permissionless global talent networks, and post-quantum computing creating both vulnerabilities and opportunities. The maturity frameworks that guide current transformation must evolve to address these emerging paradigms.

Yet the philosophical foundation remains constant: organizations, like biological systems, must evolve to survive in changing environments. The six dimensions represent the essential nutrients for this evolution—remove any element and the system degrades. GCCs conducting honest maturity assessments, prioritizing critical gaps, and executing disciplined improvement programs position themselves not just for current AI adoption but for continued relevance as the definition of "AI-native" itself evolves.

What's Next: A Call to Consciousness

The concept of Aparoksha—direct, unmediated knowledge beyond intellectual understanding—originated in ancient Indian philosophy. It describes the difference between knowing about something and knowing it directly through experience. A medical student knows about anatomy through textbooks. A surgeon knows anatomy through direct engagement—the resistance of tissue, the patterns of vasculature, the variations between patients.

Similarly, you can know about your GCC's AI maturity through reports, presentations, and executive summaries. But direct consciousness—the unmediated awareness of where you truly stand across these six dimensions—requires honest assessment, uncomfortable conversations, and willingness to confront gaps between aspiration and reality.

This consciousness isn't merely strategic—it's existential. Organizations that lack direct awareness of their evolutionary readiness while competitors develop it face displacement, not through dramatic disruption but through gradual irrelevance. The AI maturity gap compounds: leaders pull further ahead as their AI capabilities generate data that improves future AI capabilities, while laggards' stagnant capabilities yield stagnant data that perpetuates stagnation.

But consciousness alone doesn't create transformation. It creates the foundation for intentional evolution. GCCs that honestly assess their current state, understand the dimensions that determine AI-native maturity, prioritize critical gaps, and execute disciplined improvement programs can accelerate their evolution regardless of starting point.

If your GCC leadership team is ready to move beyond knowing about AI maturity to achieving direct consciousness of where you stand and what transformation requires, we invite you to engage:

Download our comprehensive GCC AI Maturity Self-Assessment Tool to evaluate your organization across all six dimensions with detailed scoring rubrics and industry benchmarks.

Schedule a complimentary 30-minute maturity assessment consultation with Apariva's GCC practice leaders to explore how this framework applies to your specific context and challenges.

Join the conversation on LinkedIn by sharing your GCC's AI maturity journey, the dimensions where you're seeing the greatest gains or facing the biggest challenges, and tag us @AparivaSystems to connect with a community of leaders navigating similar transformations.

The path from cost center to innovation organism isn't traveled alone. It requires partners who understand both the technical complexities of AI implementation and the organizational dynamics of transformation at scale. Apariva exists to accelerate this journey—not as vendors selling products but as thought partners committed to your success in the AI era.

The question isn't whether your GCC will transform—the competitive dynamics and technological trajectories make transformation inevitable. The question is whether you'll drive that transformation intentionally, with clear awareness and strategic purpose, or experience it reactively, shaped by forces you don't fully understand.

We invite you to choose consciousness. Choose intentional evolution. Choose to measure your readiness honestly, address your gaps systematically, and build the organizational capabilities that will define competitive advantage for the decade ahead.

Your GCC's future as an innovation organism rather than a cost center begins with direct awareness of where you stand today. Let's begin that assessment together.